Automating Memory Leak Detection: Improve Your Dockerized CI Pipelines Now

Memory leaks lurk in the shadows of the software industry, acting as silent saboteurs. These insidious flaws stem from improper memory management: memory is allocated but never released, so usage creeps up over time. As memory depletes, performance withers away, and in extreme cases the system crashes. Detecting and fixing these leaks is akin to finding a needle in a software haystack and remains one of the most challenging aspects of coding. However, the need for such detection cannot be overstated.

Rest assured, the roadmap laid down here is not tied to your project’s technology stack. We will use Express.js, React.js, and Playwright as illustrations, yet the guiding principles carry over to other stacks.

Explore the sample repository on GitHub: Memory Leak Detection in Dockerized E2E pipelines

Prerequisites

Before we march into the domain of memory leak detection, it’s crucial to ensure we are armed with the right tools. Your toolkit for this expedition should include the following:

- A Dockerized Environment: Detecting memory leaks is simpler within the bounded spaces of Docker containers, where each container’s memory usage can be measured on its own. Having your project Dockerized allows for precise control and monitoring of memory usage, making leak detection more manageable.

- CI Workflow: A Continuous Integration (CI) system, such as GitHub Actions, is pivotal for orchestrating our operations. Ideally, this system should run checks on every pull request and require them to pass before merging. This setup maximizes our chances of catching memory leaks early, before they seep into the main codebase.

- Simulation of Complex User Interactions: Unmasking potential memory leaks requires complex interactions with your application, mirroring real user behavior. An automated E2E testing pipeline can effectively achieve this, though any mechanism simulating genuine user interactions will suffice.

Quick Demo

Let’s set the stage for a live demonstration, where we intentionally sabotage an Express app with a memory leak and witness the consequences. Our plot involves a global variable that grows each time a specific route is requested. Once the E2E tests hit this route, the memory leak springs into action.

```javascript
import express from "express";

const app = express();
const port = 5000;

// Module-level array: it lives as long as the process, so nothing pushed here is ever freed.
const leaks = [];

app.get("/", (req, res) => {
  // Every request appends 10,000 strings of 999 characters each (about 10 million characters).
  for (let i = 0; i < 10000; i++) {
    leaks.push(new Array(1000).join("x"));
  }

  res.send(`Memory leak page. Current leaks length: ${leaks.length}`);
});

app.listen(port, () => {
  console.log(`Server is running on http://localhost:${port}`);
});
```

As we ignite this process, we witness an alarming escalation. Memory usage catapults by a staggering 500% during the E2E tests, flashing the red signal of a probable memory leak and failing the pipeline.

On the flip side, if we roll back that modification, memory usage remains steady and our pipeline sails through without a hitch, a testament to the power of careful memory management.
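To see the mechanism without Express or Docker, here is a minimal TypeScript sketch that simulates requests by calling handler functions directly. `leakyHandler` mirrors the route above by pushing into a module-level array; `fixedHandler` is a hypothetical non-leaking variant (not from the sample repository) that keeps its strings in a local array, so they become unreachable once the call returns.

```typescript
// Module-level state survives between calls, just like `leaks` in the Express app.
const leaks: string[] = [];

// Mirrors the leaking "/" handler: every call appends 10,000 strings that are never released.
function leakyHandler(): number {
  for (let i = 0; i < 10000; i++) {
    leaks.push(new Array(1000).join("x"));
  }
  return leaks.length;
}

// Hypothetical fixed variant: the buffer is local, so it becomes garbage after the call returns.
function fixedHandler(): number {
  const scratch: string[] = [];
  for (let i = 0; i < 10000; i++) {
    scratch.push(new Array(1000).join("x"));
  }
  return scratch.length;
}

// Simulate three requests against each handler.
const leakyCounts = [leakyHandler(), leakyHandler(), leakyHandler()];
const fixedCounts = [fixedHandler(), fixedHandler(), fixedHandler()];

console.log("leaky:", leakyCounts); // retained entries grow with every call
console.log("fixed:", fixedCounts); // each call only ever sees its own 10,000 entries
```

The leaky count grows by 10,000 per call because the module-level array keeps every string reachable. That steadily growing, never-released memory is what the container-level check later picks up.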

E2E Test Pipeline: The Orchestrator

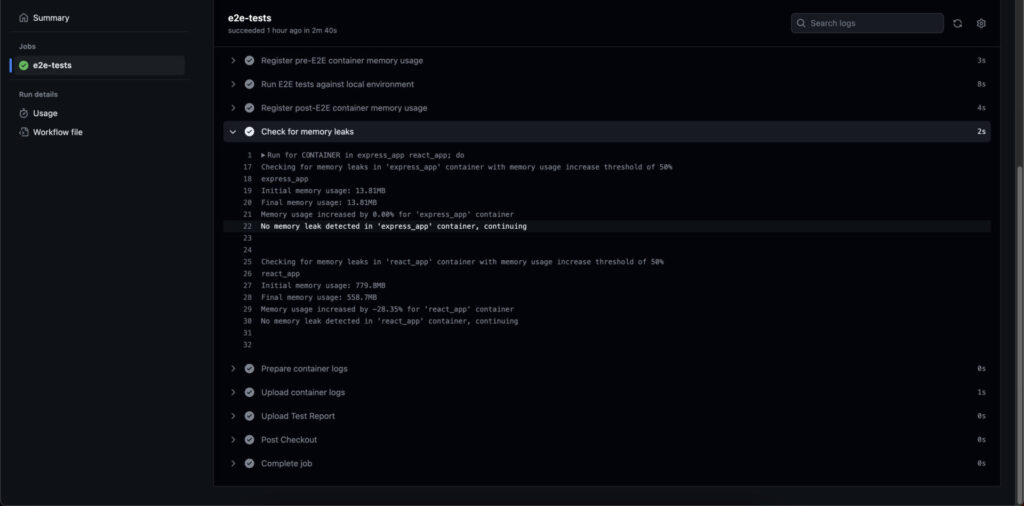

The e2e-tests.yml workflow file plays the maestro in our memory leak detection orchestra. It sets the stage by taking a snapshot of our Docker containers’ memory usage before the E2E tests start their act. Once the performance wraps up, it records the closing memory usage, and our checkForMemoryLeaks.ts script steps in to compare the two readings.
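The workflow implements this sequence as bash steps. Purely to illustrate the order of operations, here is a TypeScript sketch with the memory reader injected as a function; `Snapshot`, `MemoryReader`, `takeSnapshot`, and `snapshotAround` are hypothetical names. In CI the reader would wrap `docker stats --no-stream`, while here a fake reader stands in so the sketch runs anywhere.

```typescript
// Memory readings in MiB, keyed by container name.
type Snapshot = Record<string, number>;

// Hypothetical reader: in CI this would wrap `docker stats --no-stream --format '{{.MemUsage}}'`.
type MemoryReader = (container: string) => number;

// One reading per container, like the "Register ... memory usage" steps.
function takeSnapshot(containers: string[], read: MemoryReader): Snapshot {
  const snapshot: Snapshot = {};
  for (const container of containers) {
    snapshot[container] = read(container);
  }
  return snapshot;
}

// Snapshot, run the tests, snapshot again: the two readings the leak check compares.
function snapshotAround(
  containers: string[],
  read: MemoryReader,
  runTests: () => void,
): { before: Snapshot; after: Snapshot } {
  const before = takeSnapshot(containers, read);
  runTests();
  const after = takeSnapshot(containers, read);
  return { before, after };
}

// Fake reader for illustration: "express_app" grows while the tests run, "react_app" stays flat.
let testsFinished = false;
const fakeRead: MemoryReader = (container) =>
  container === "express_app" ? (testsFinished ? 60 : 10) : 50;

const readings = snapshotAround(["express_app", "react_app"], fakeRead, () => {
  testsFinished = true; // stands in for the `docker run ... playwright_tests` step
});

console.log(readings.before, readings.after);
```

Injecting the reader keeps the ordering logic testable without Docker; the real workflow simply does the same three steps inline.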

```yaml
# Define the name of the workflow.
name: E2E Tests

# Define the events that trigger the workflow.
on:
  # Run the workflow when a pull request is made to the "main" branch.
  pull_request:
    branches: [main]
  # Allow running the workflow manually from the GitHub Actions tab.
  workflow_dispatch:

# Define global environment variables that will be used in this workflow.
env:
  # Define the container names that will be tested for memory leaks.
  CONTAINERS_TO_MEM_CHECK: "express_app react_app"
  # Define the container names that will have their logs captured as artifacts.
  CONTAINERS_TO_LOG: "express_app react_app playwright_tests"

# Define the jobs in this workflow.
jobs:
  e2e-tests:
    # Run this job on the latest Ubuntu (Linux) runner hosted by GitHub.
    runs-on: ubuntu-latest
    # Limit the execution time of this job to 15 minutes.
    timeout-minutes: 15

    # Define the steps in this job.
    steps:
      # Check out this repository.
      - name: Checkout
        uses: actions/checkout@v4

      # Build and start the "express_app" and "react_app" containers in detached mode to avoid blocking the workflow.
      - name: Start local environment with Docker Compose
        run: docker compose up -d

      # Build the Docker image for E2E tests.
      - name: Build E2E Docker image
        run: docker build -t playwright_tests ./e2e

      # ts-node is required to run the checkForMemoryLeaks script.
      - name: Install ts-node
        run: npm install -g ts-node

      # Will be used when checking for memory leaks.
      - name: Register pre-E2E container memory usage
        run: |
          for CONTAINER in ${{ env.CONTAINERS_TO_MEM_CHECK }}; do
            # MemUsage prints "<usage> / <limit>" (e.g. "12.34MiB / 1.944GiB"); awk keeps the usage.
            MEMORY_USAGE=$(docker stats --no-stream --format '{{.MemUsage}}' $CONTAINER | awk '{print $1}')
            echo "Pre-E2E memory usage for $CONTAINER: $MEMORY_USAGE"
            # Store the initial memory usage of each container as an environment variable.
            echo "${CONTAINER}_INITIAL_MEMORY_USAGE=$MEMORY_USAGE" >> $GITHUB_ENV
          done

      # Execute the E2E tests.
      - name: Run E2E tests against local environment
        run: docker run -v $(pwd)/e2e-report:/app/e2e-report --name playwright_tests --network=host playwright_tests yarn e2etest:ci

      # Will be used when checking for memory leaks.
      - name: Register post-E2E container memory usage
        run: |
          for CONTAINER in ${{ env.CONTAINERS_TO_MEM_CHECK }}; do
            MEMORY_USAGE=$(docker stats --no-stream --format '{{.MemUsage}}' $CONTAINER | awk '{print $1}')
            echo "Post-E2E memory usage for $CONTAINER: $MEMORY_USAGE"
            # Store the final memory usage of each container as an environment variable.
            echo "${CONTAINER}_FINAL_MEMORY_USAGE=$MEMORY_USAGE" >> $GITHUB_ENV
          done

      # Fail the workflow if any of the containers have memory leaks.
      - name: Check for memory leaks
        run: |
          for CONTAINER in ${{ env.CONTAINERS_TO_MEM_CHECK }}; do
            INITIAL_MEM_USAGE="${CONTAINER}_INITIAL_MEMORY_USAGE"
            FINAL_MEM_USAGE="${CONTAINER}_FINAL_MEMORY_USAGE"
            # Use the checkForMemoryLeaks script to check for memory leaks in each container.
            # ${!VAR} is bash indirect expansion: it reads the variable whose name is stored in VAR.
            ts-node ./scripts/checkForMemoryLeaks.ts $CONTAINER ${!INITIAL_MEM_USAGE} ${!FINAL_MEM_USAGE}
          done

      # Prepare the logs for all containers.
      - name: Prepare container logs
        if: always() # Ensure logs are captured, even if the tests fail.
        run: |
          # Create the "logs" directory.
          mkdir -p logs
          # Export each Docker container's logs to files in the "logs" directory.
          for CONTAINER in ${{ env.CONTAINERS_TO_LOG }}; do
            docker logs $CONTAINER >& logs/$CONTAINER.log
          done

      # Upload the logs for all containers as an artifact.
      - name: Upload container logs
        if: always() # Ensure logs are captured, even if the tests fail.
        uses: actions/upload-artifact@v4
        with:
          name: E2E Logs
          path: logs

      # Upload the test report and video recordings as an artifact.
      - name: Upload Test Report
        if: always() # Ensure test report is captured, even if the tests fail.
        uses: actions/upload-artifact@v4
        with:
          name: E2E Test Report
          path: e2e-report
```

checkForMemoryLeaks.ts Script: The Leak Detector

The checkForMemoryLeaks.ts script, a Node.js program written in TypeScript, is our detailed analyst: it compares a Docker container’s initial and final memory usage. If usage grows by more than a threshold percentage (50% in this illustration), the script raises the alarm, reports a suspected memory leak, and exits with a non-zero status, which fails the workflow. If the increase stays within acceptable limits, the script breathes a sigh of relief and reports that no memory leak was detected.

For its investigation, the script needs three clues from the command line: the Docker container’s name and its initial and final memory usage.
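The decision at the heart of the script is simple arithmetic. As a hedged sketch, here it is isolated into pure functions with worked examples; `percentIncrease` and `isSuspectedLeak` are illustrative names, not taken from the sample repository, and the readings are made up.

```typescript
// Same threshold as the script: growth above 50% is treated as a suspected leak.
const MEMORY_LEAK_PERCENTAGE_THRESHOLD = 50;

// Percentage growth from the initial to the final reading (both in MiB).
function percentIncrease(initialMiB: number, finalMiB: number): number {
  if (!(initialMiB > 0)) {
    // A zero, negative, or NaN baseline makes the percentage meaningless.
    throw new Error(`Invalid initial memory reading: ${initialMiB}`);
  }
  return ((finalMiB - initialMiB) / initialMiB) * 100;
}

// True when growth is strictly greater than the threshold, matching the script's `>` check.
function isSuspectedLeak(
  initialMiB: number,
  finalMiB: number,
  thresholdPercent: number = MEMORY_LEAK_PERCENTAGE_THRESHOLD,
): boolean {
  return percentIncrease(initialMiB, finalMiB) > thresholdPercent;
}

// 100 -> 600 MiB: (600 - 100) / 100 * 100 = 500%, so it is flagged.
console.log(percentIncrease(100, 600), isSuspectedLeak(100, 600));
// 100 -> 150 MiB: exactly 50%, which is not *past* the threshold, so it passes.
console.log(percentIncrease(100, 150), isSuspectedLeak(100, 150));
```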

```typescript
// Fail when memory grows by more than this percentage between the two readings.
const MEMORY_LEAK_PERCENTAGE_THRESHOLD = 50;

// Convert a `docker stats` memory reading to MiB.
// If input is '12.34MiB', then return 12.34
// If input is '1.234GiB', then return 1263.616 (GiB value in MiB)
// If input is '512KiB', then return 0.5
function formatMemoryUsage(rawUsage: string): number {
  // Keep only digits and the decimal point, e.g. '1.234GiB' -> '1.234'.
  const usage = parseFloat(rawUsage.replace(/[^\d.]/g, ""));

  if (isNaN(usage)) {
    throw new Error(`Could not parse memory usage '${rawUsage}'`);
  }

  // Check the unit on the raw string: it has already been stripped from the numeric part.
  if (rawUsage.includes("GiB")) return usage * 1024;
  if (rawUsage.includes("KiB")) return usage / 1024;
  return usage;
}

function checkForMemoryLeaks(
  container: string,
  rawInitialMemUsage: string,
  rawFinalMemUsage: string,
): void {
  const initialMemUsageMB = formatMemoryUsage(rawInitialMemUsage);
  const finalMemUsageMB = formatMemoryUsage(rawFinalMemUsage);

  console.log(
    `Checking for memory leaks in '${container}' container with memory usage increase threshold of ${MEMORY_LEAK_PERCENTAGE_THRESHOLD}%`,
  );
  console.log(`Initial memory usage: ${initialMemUsageMB}MB`);
  console.log(`Final memory usage: ${finalMemUsageMB}MB`);

  if (initialMemUsageMB <= 0) {
    // A zero baseline would cause a division by zero below.
    console.error(`Initial memory usage for '${container}' is zero; is the container running?`);
    process.exit(1);
  }

  const memoryUsageIncreaseMB = finalMemUsageMB - initialMemUsageMB;
  const increasePercentage = (memoryUsageIncreaseMB / initialMemUsageMB) * 100;
  const increasePercentageRounded = increasePercentage.toFixed(2);

  console.log(
    `Memory usage increased by ${increasePercentageRounded}% for '${container}' container`,
  );

  if (increasePercentage > MEMORY_LEAK_PERCENTAGE_THRESHOLD) {
    console.error(
      `Possible memory leak detected in '${container}' container, aborting process!\n\n`,
    );
    // A non-zero exit code fails the "Check for memory leaks" workflow step.
    process.exit(1);
  } else {
    console.log(
      `No memory leak detected in '${container}' container, continuing\n\n`,
    );
  }
}

// Usage: ts-node checkForMemoryLeaks.ts <container> <initialMemUsage> <finalMemUsage>
const [container, initialMemUsage, finalMemUsage] = process.argv.slice(2);

if (!container || !initialMemUsage || !finalMemUsage) {
  console.error("Usage: checkForMemoryLeaks.ts <container> <initialMemUsage> <finalMemUsage>");
  process.exit(1);
}

checkForMemoryLeaks(container, initialMemUsage, finalMemUsage);
```

Limitations

While this approach is effective at unmasking severe memory leaks, it’s not without its blind spots. Understanding these limitations helps us grasp the intricacies at play and how best to navigate them:

- False Alarms: The script only checks whether memory usage rose past a certain limit. It cannot tell whether the surge comes from a memory leak or merely from increased data processing (for example, caches warming up). As a result, there may be false positives (mistaking normal operation for a leak) or false negatives (overlooking real leaks).

- Walking the Tightrope of Memory Threshold: Choosing the right memory usage increase threshold is like walking a tightrope. A high threshold might miss minor leaks, while a low one could trigger frequent false alarms.

- Memory Management Variability: How a container’s memory usage evolves depends on your application’s technology stack (for instance, garbage-collected runtimes may hold on to freed memory instead of returning it to the operating system), so this method, and especially its threshold, is not universally applicable.
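One possible way to ease the threshold tightrope, not part of the sample repository, is to require both a relative and an absolute increase before flagging a leak, so that a small container with a tiny baseline cannot trip a purely percentage-based rule. A minimal sketch, where `LeakThresholds`, `isLikelyLeak`, and the 100 MiB floor are hypothetical, illustrative choices:

```typescript
// Hypothetical thresholds: a leak is reported only when BOTH are exceeded.
interface LeakThresholds {
  minPercent: number; // relative growth, as in checkForMemoryLeaks.ts
  minMiB: number; // absolute growth floor, so tiny baselines cannot trip the rule alone
}

function isLikelyLeak(initialMiB: number, finalMiB: number, t: LeakThresholds): boolean {
  if (!(initialMiB > 0)) {
    throw new Error(`Invalid initial memory reading: ${initialMiB}`);
  }
  const growthMiB = finalMiB - initialMiB;
  const growthPercent = (growthMiB / initialMiB) * 100;
  return growthPercent > t.minPercent && growthMiB > t.minMiB;
}

// Illustrative values only; tune them for your own containers.
const thresholds: LeakThresholds = { minPercent: 50, minMiB: 100 };

// 20 -> 40 MiB doubles (+100%) but gains only 20 MiB, so it is not flagged.
console.log(isLikelyLeak(20, 40, thresholds));
// 100 -> 600 MiB gains 500% and 500 MiB, so it is flagged.
console.log(isLikelyLeak(100, 600, thresholds));
```

The trade-off is that an absolute floor can hide a genuine slow leak in a small service, so the floor should stay well below the growth you would consider harmful.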

Memory leaks, crafty and destructive, act as software’s silent disruptors. However, they aren’t invincible: armed with the right strategies and tools, we can catch them early. Hopefully, this tutorial has equipped you to leverage GitHub Actions and Docker to confront memory leaks directly, enhancing your software’s resilience.